On October 13th, 2023, the European Commission launched a stakeholder survey on the eleven draft guiding principles for Generative AI (GAI) and other advanced AI systems. This initiative came a few days after a positive meeting between the Commission’s Vice-President, Věra Jourová, and the Japanese Minister of Finance and Minister of State for Financial Services, Shunichi Suzuki, at the 18th annual meeting of the Internet Governance Forum, organised by the United Nations.

The eleven draft guiding principles, which are being developed by the G7 members as part of the Hiroshima AI Process, aim to establish global safeguards and invite all AI actors involved in the design, development, deployment and use of advanced AI systems, such as GAI, to:

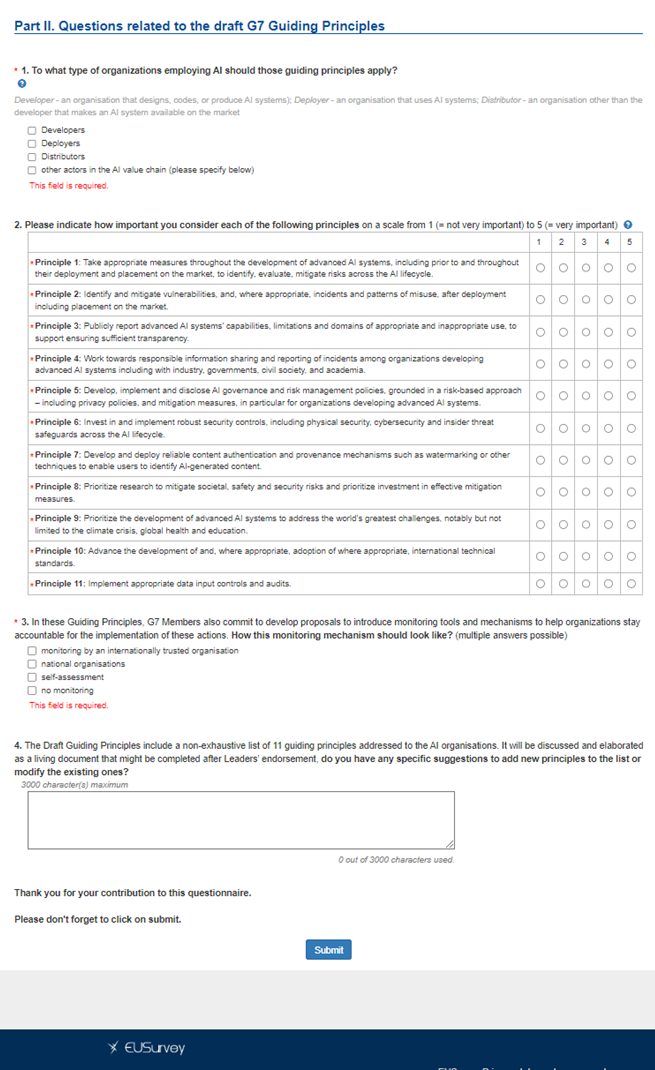

- Take appropriate measures throughout the development of advanced AI systems, including prior to and throughout their deployment and placement on the market, to identify, evaluate and mitigate risks across the AI lifecycle;

- Identify and mitigate vulnerabilities, and, where appropriate, incidents and patterns of misuse, after deployment including placement on the market;

- Publicly report advanced AI systems’ capabilities, limitations and domains of appropriate and inappropriate use, to support ensuring sufficient transparency;

- Work towards responsible information sharing and reporting of incidents among organizations developing advanced AI systems, including with industry, governments, civil society and academia;

- Develop, implement and disclose AI governance and risk management policies, grounded in a risk-based approach – including privacy policies, and mitigation measures, in particular for organizations developing advanced AI systems;

- Invest in and implement robust security controls, including physical security, cybersecurity and insider threat safeguards across the AI lifecycle;

- Develop and deploy reliable content authentication and provenance mechanisms such as watermarking or other techniques to enable users to identify AI-generated content;

- Prioritize research to mitigate societal, safety and security risks and prioritize investment in effective mitigation measures;

- Prioritize the development of advanced AI systems to address the world’s greatest challenges, notably but not limited to the climate crisis, global health and education;

- Advance the development of and, where appropriate, adoption of international technical standards;

- Implement appropriate data input controls and audits.

The stakeholder survey launched by the European Commission remained open for seven days and was composed of four questions.

As Thierry Breton, Commissioner for the Internal Market, notes in the Commission’s press release of October 13th, 2023, “the key principles of the AI Act serve as inspiration for international approaches to AI regulation and governance”, thus reflecting “Europe’s role as a global standard-setter”. Most of the guiding principles being developed have already been taken into account in the EU AI Act proposal. The proposal already includes, for example, obligations concerning “testing, risk management, documentation and human oversight throughout the AI systems’ lifecycle”, as well as the requirement to inform “national competent authorities about serious incidents or malfunctioning that constitute a breach of fundamental rights obligations”.

Question 3 of the Commission’s stakeholder survey deserves particular attention. It concerns what the monitoring mechanism “should look like”, a mechanism intended “to help organizations stay accountable for the implementation” of the eleven draft guiding principles. The establishment of monitoring tools and mechanisms is at the heart of the future EU AI Act. The European Union is therefore urging the international adoption of a compliance mechanism that publicly names non-compliant companies. The United States, however, is firmly opposed to any oversight of companies’ progress in observing these guiding principles.

Meanwhile, fifteen American tech companies have already signed up to President Joe Biden’s voluntary commitments governing AI in relation to safety, security and trust, while the EU is still debating the final shape of the AI Act proposal.

T. Karathanasis