A fierce debate rages in Brussels over which AI systems should be considered as “High Risk”, while the systems in Annex II of the EU AI Act have attracted less attention. Here is a guide (with infographics) on the classification of ALL “High Risk” systems in the AI Act, as well as the corresponding conformity assessment procedures.

The Commission’s proposal for an EU AI Act has been debated since April 2021 under the ordinary legislative procedure (co-decision)[1] at both the Council of the EU (Council) and the European Parliament (Parliament); as a result of this debate, it is likely to be amended. On December 6th, 2022, the Council adopted its amendments to the AI Act proposal (the Council Approach), while the AI Act is still under consideration at the Parliament (the Parliament Approach). The developments provided in this paper are based principally on the Commission’s draft. The Council and Parliament Approaches are briefly mentioned whenever their proposals have an impact on the classification and the conformity assessment of high-risk AI systems.

I. The High-Risk AI Systems’ Classification procedure under Article 6 of the AI Act

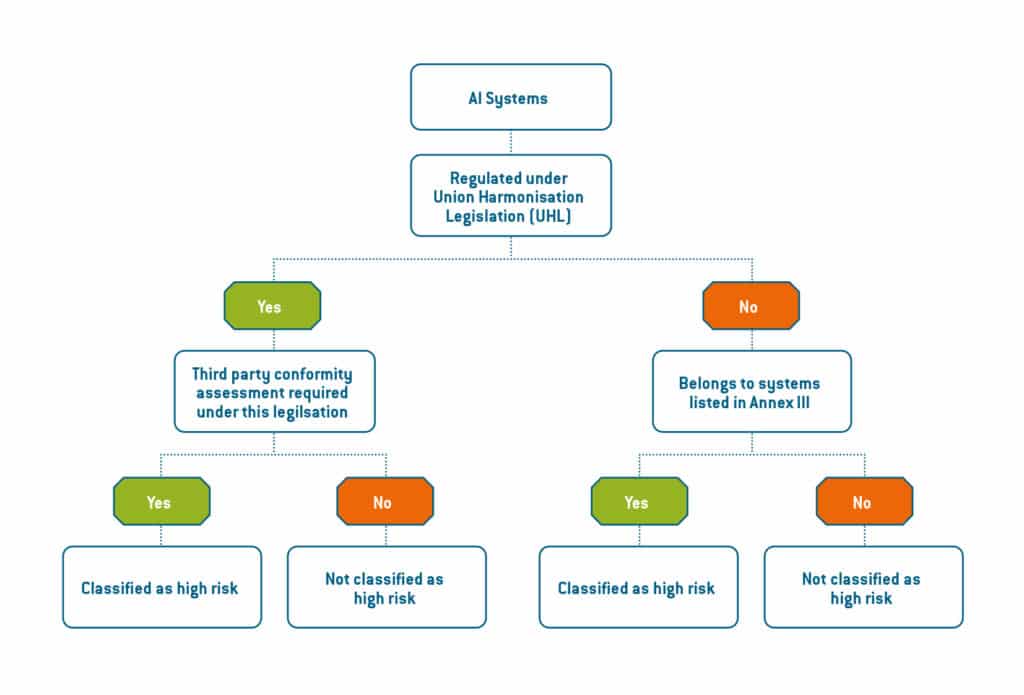

Irrespective of whether an AI system is intended to be used as a safety component of a product,[2] or is itself a product, Article 6 of the AI Act provides that whenever the AI system is intended to be used in areas regulated by the legal acts listed in Annex II or Annex III of the AI Act, it shall be considered high risk. While AI systems that are intended to be used in areas covered by Annex III of the AI Act are automatically classified as high risk (Article 6§2 of the AI Act), classification under Annex II of the AI Act is subject to certain conditions (Article 6§1 of the AI Act).

1. The Conditions involved in the Classification of High-Risk AI Systems for Areas Covered under Annex II

Two conditions have to be fulfilled for an AI system that is intended to be used as a safety component of a product, or is itself a product, to be classified as high risk in areas regulated by the legal acts listed in Annex II. First, the intended use of the AI system should fall within the Union harmonisation legislation listed in Annex II; second, it should be required to undergo a third-party conformity assessment with a view to placing it on the market or putting it into service pursuant to the aforementioned legislation.
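The two cumulative conditions described above can be read as a simple conjunction. The following is a minimal sketch of that reading only, not a legal test; the class and field names (`ProductContext`, `covered_by_annex_ii_act`, `third_party_assessment_required`) are hypothetical and do not come from the AI Act.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductContext:
    """Hypothetical description of an AI system that is a product or a safety component."""

    # Condition 1: the intended use falls within a legal act listed in Annex II.
    covered_by_annex_ii_act: bool
    # Condition 2: that legal act requires a third-party conformity assessment.
    third_party_assessment_required: bool


def is_high_risk_under_annex_ii(ctx: ProductContext) -> bool:
    """Both conditions must hold (cumulative reading of Article 6§1)."""
    return ctx.covered_by_annex_ii_act and ctx.third_party_assessment_required


# Illustrative case: covered by an Annex II act, but only an in-house assessment applies.
in_house_only = ProductContext(
    covered_by_annex_ii_act=True,
    third_party_assessment_required=False,
)
```

In this sketch, `is_high_risk_under_annex_ii(in_house_only)` is false because the second condition fails, even though the product falls within Annex II.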

a. The First Condition: That it be Regulated Under the Union Harmonisation Legislation listed in Annex II

Union harmonisation legislation covers a large range of manufactured products. It sets out common requirements on how a product has to be manufactured, including rules on its size and composition. Its aim is not only to eliminate barriers in terms of the free movement of goods in the single market, but also to ensure that only safe and compliant products are sold in the EU. Non-compliant and unsafe products put people at risk and might distort competition with economic operators that sell compliant products within the Union. Against this background, the Commission states in recital 28 of the AI Act that “the safety risks eventually generated by a product as a whole due to its digital components, including AI systems”, should be “duly prevented and mitigated”.

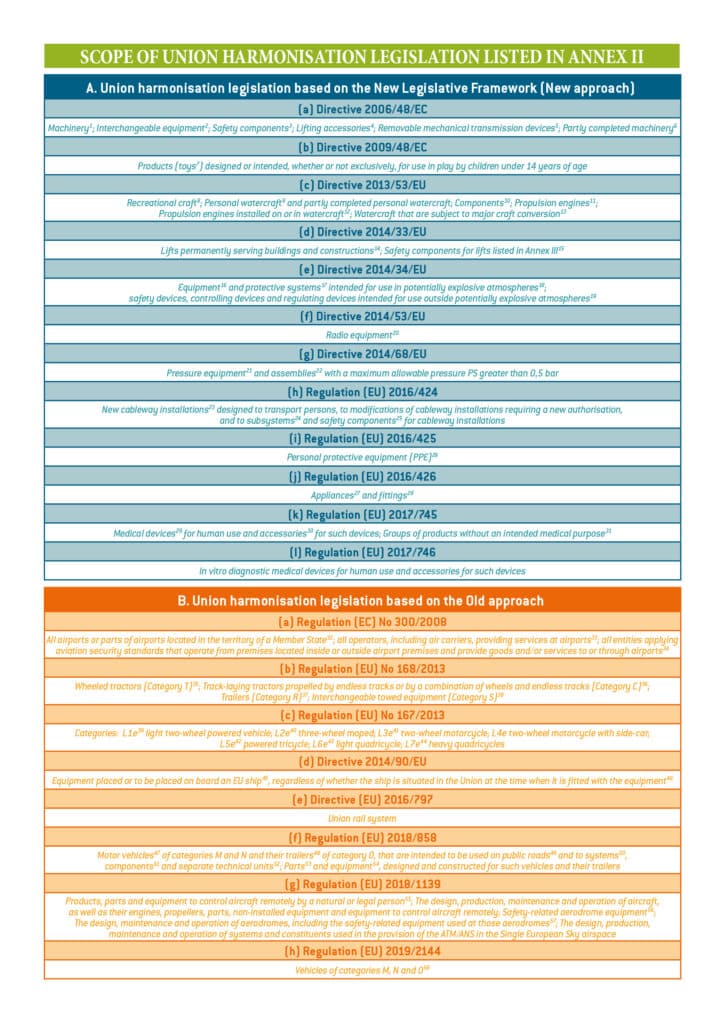

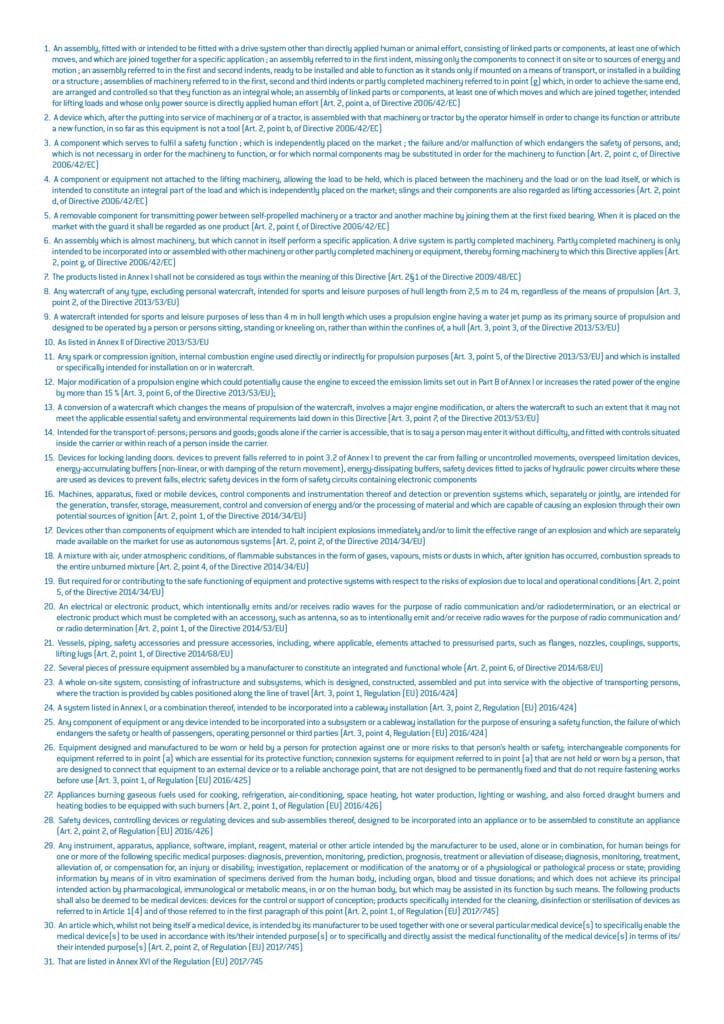

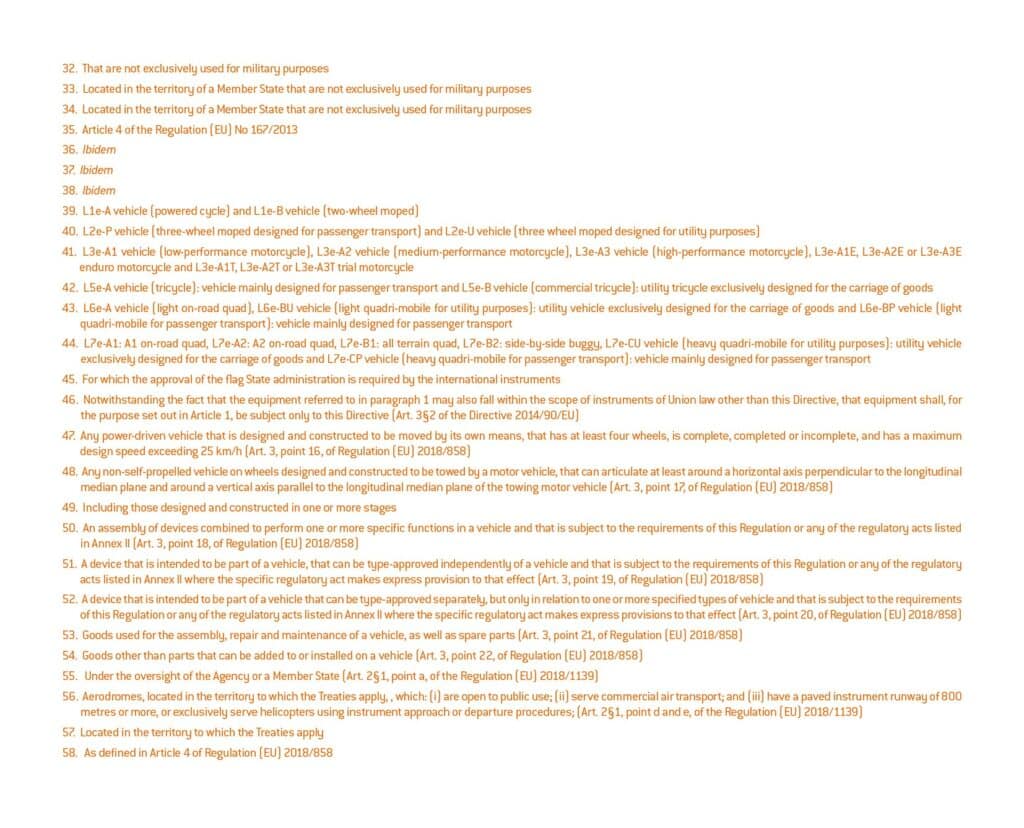

Annex II of the AI Act therefore lists a number of directives and regulations that originate from Union harmonisation legislation and that cover products that include AI systems that may be intended to be used either as a safety component or as a product. However, the Commission makes a distinction between the legal acts that are based on the New Legislative Framework approach (Section A of Annex II) and those that are based on the Old approach (Section B of Annex II). The table, which is presented at the end of this paper, provides details on the two sections as well as the products concerned.

| What is the New Legislative Framework (NLF)? The combination of Regulation (EC) 765/2008 and Decision (EC) 768/2008 has resulted in the New Legislative Framework (NLF), which includes all of the elements required for a comprehensive regulatory framework to operate effectively in order that industrial products may be safe and compliant. This framework is intended to cope with the requirements adopted to protect the various public interests and for the proper functioning of the single market. According to the Commission’s evaluation of the NLF in late 2022, the technologically neutral regulatory framework is “perfectly suited to cope with the higher speed of technical innovation”[3]. |

This distinction relies on the interplay between the conformity assessment procedure provided by these legal instruments, and the AI Act, since additional requirements will become directly applicable and checked against the conformity assessment system that already exists under these two approaches.[4]

| A conformity assessment procedure takes place before the product can be put on the market. The objective is to demonstrate that a product placed on the market complies with all legislative requirements and that it secures the confidence of consumers, public authorities and manufacturers. |

Most of the legal acts detailed in section A provide detailed and exhaustive provisions on the conformity assessment requirements imposed on the products that they cover. According to Article 43§3 of the AI Act, providers of AI systems classified as high risk will have to comply not only with the relevant conformity assessment as required under these legal acts, but also with the requirements set out in Chapter 2, Title III of the AI Act and in points 4.3., 4.4., 4.5., 4.6§5 of Annex VII of the AI Act. At the same time, the Commission will amend the Union harmonisation legislation using the old approach (Section B, Annex II) through Articles 75-80 of the AI Act in order to ensure that “the mandatory requirements for high-risk AI systems, laid down in the AI Act, are considered when adopting any relevant future delegated or implementing acts on the basis of those acts”.[5]

This brings us to the second criterion involved in classifying high-risk AI systems that fall within the scope of Annex II of the AI Act: the third-party conformity assessment criterion.

b. The Third-Party Conformity Assessment Criterion

Whenever AI systems undergo a conformity assessment procedure via a third-party conformity assessment body pursuant to the relevant Union harmonisation legislation, they have to be classified as high-risk AI systems (Article 6§1 of the AI Act).

| A third-party conformity assessment should be understood, under the AI Act, as meaning the conformity assessment activities, which include testing, certification and inspection, that are conducted by a designated conformity assessment body on behalf of the national notifying authority. This therefore excludes any “in-house” conformity assessments. |

While all[6] of the legal acts listed in section A of Annex II of the AI Act, which are also based on the NLF, clearly provide for third-party conformity assessments, the other legal acts listed in section B do not, for the most part, mention such assessments. This means that the fulfilment of this criterion is open to interpretation.

Indeed, with the exception of Directive 2014/90/EU on marine equipment and Directive (EU) 2016/797 on the interoperability of the rail system within the EU, in which references to third-party conformity assessments can be found, the other legal acts mentioned in section B, Annex II of the AI Act make no reference to “third-party conformity assessments”. It is therefore unlikely that an AI system that is intended to be used in areas covered by these legal acts will be classified as high risk, since not all products are specifically required to undergo such a third-party conformity assessment. This is understandable: the third-party conformity assessment criterion under the AI Act has to be fulfilled, but it is not applicable in all situations.

However, Regulation (EU) 167/2013, Regulation (EU) 168/2013 and Regulation (EU) 2018/858 make reference to the term “technical service”, which is worth analysing. Following the definition provided by these three legal acts, a “technical service” means “an organisation or body designated by the approval authority as a testing laboratory to carry out tests, or as a conformity assessment body to carry out the initial assessment and other tests or inspections”. This definition is quite similar to that of the third-party conformity assessment under the AI Act.

This leaves us with three legal acts: Regulation (EC) No 300/2008, Regulation (EU) 2018/1139 and Regulation (EU) 2019/2144. While they do not make explicit reference to third-party conformity assessments, the final two regulations refer to a certificate of conformity. It is clearly mentioned in Regulation (EU) 2018/1139 regarding common rules in the field of civil aviation, for example, that in order to be safe and environmentally compatible, the design of individual aircraft should comply with a certificate that is delivered by organisations responsible for the design and manufacture of products, parts and non-installed equipment. This is a procedure that one can reasonably infer to be a third-party conformity assessment. Regulation (EC) No 300/2008, by contrast, makes no clear reference to a (third-party) conformity assessment.

The planned amendments to these legal acts under Articles 75-80 of the AI Act will therefore ensure that the mandatory requirements for high-risk AI systems will be considered when the Commission adopts “any relevant future delegated or implementing acts on the basis of those acts”. It will also be important, however, to clarify the issues concerning the term “third-party conformity assessment”.

2. The “Automatic” Classification of High-Risk AI Systems for Areas Covered under Annex III

In contrast to the procedure presented above that involves conditions, the procedure laid down in Annex III is automatic since it does not involve any third-party conformity assessment criterion. In other words, each time an AI system is used in accordance with the areas listed under Annex III of the AI Act – either because it poses a health and safety risk or poses a risk to people’s fundamental rights – it will be automatically considered “high risk”.

However, it should be mentioned that the Council introduced an exception to this rule in its compromise text of December 6th, 2022. In cases where “the output of the system is purely accessory in respect of the relevant action or decision to be taken and is not therefore likely to lead to a significant risk to the health, safety or fundamental rights”, [7] the AI system should not be considered as high risk, even if it is intended to be used in accordance with the areas listed in Annex III. Therefore, over the first year following the entry into force of the AI Act, the Commission will have to adopt “implementing acts to specify the circumstances where the output of AI systems referred to in Annex III would be purely accessory in respect of the relevant action or decision to be taken”.[8] For this purpose, the AI Act sets out an obligation for the Commission to follow the examination procedure[9] provided in Article 5 of Regulation (EU) 182/2011.
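The automatic rule and the Council’s “purely accessory” exception described above can be contrasted in a short sketch. It is an illustration under stated assumptions, not a statement of the law: the enum `DraftText`, the function name and its parameters are hypothetical, and whether an output is “purely accessory” would in practice depend on the implementing acts the Commission has yet to adopt.

```python
from enum import Enum


class DraftText(Enum):
    """Which version of the proposal is being applied (hypothetical labels)."""

    COMMISSION = "Commission proposal"
    COUNCIL = "Council compromise text of 6 December 2022"


def is_high_risk_under_annex_iii(
    intended_for_annex_iii_area: bool,
    output_purely_accessory: bool = False,
    text: DraftText = DraftText.COMMISSION,
) -> bool:
    """Sketch of the Annex III classification as summarised in this paper."""
    if not intended_for_annex_iii_area:
        return False
    # Only the Council text carves out systems whose output is purely accessory.
    if text is DraftText.COUNCIL and output_purely_accessory:
        return False
    # Otherwise classification is automatic: no third-party assessment criterion.
    return True
```

Under the Commission’s draft the `output_purely_accessory` flag has no effect, which is what makes the classification “automatic”; under the Council text the same system may fall outside the high-risk category.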

The critical areas covered by Annex III of the AI Act are as follows: (a) biometric identification and categorisation of natural persons; (b) management and operation of critical infrastructure; (c) education and vocational training; (d) employment, worker management and access to self-employment; (e) access to and enjoyment of essential public and private services and benefits; (f) law enforcement; (g) migration, asylum and border control management and (h) administration of justice and democratic processes. According to the Council and the Parliament Approaches, amendments to the critical areas and specific use cases listed in Annex III are expected to be proposed.

While the Council’s Approach proposes removing the use of AI systems for the detection of deep fakes, for crime analytics and for the verification of the authenticity of travel documents and supporting documentation of natural persons, the Parliament Approach is far more interventionist with regard to Annex III. The most noteworthy amendments concern the intended use of AI systems for biometric identification and categorisation of natural persons, together with the addition of a critical area concerning “other applications”. The Parliament is considering placing conversational and art-generating AI tools such as ChatGPT and DALL-E-2 in the high-risk category via this new critical area. “Subliminal techniques” used for therapeutic or scientific purposes may also end up in the high-risk bucket. However, the interinstitutional negotiations (known as trialogues[10]) need to be completed before the final contents of Annex III can be determined.

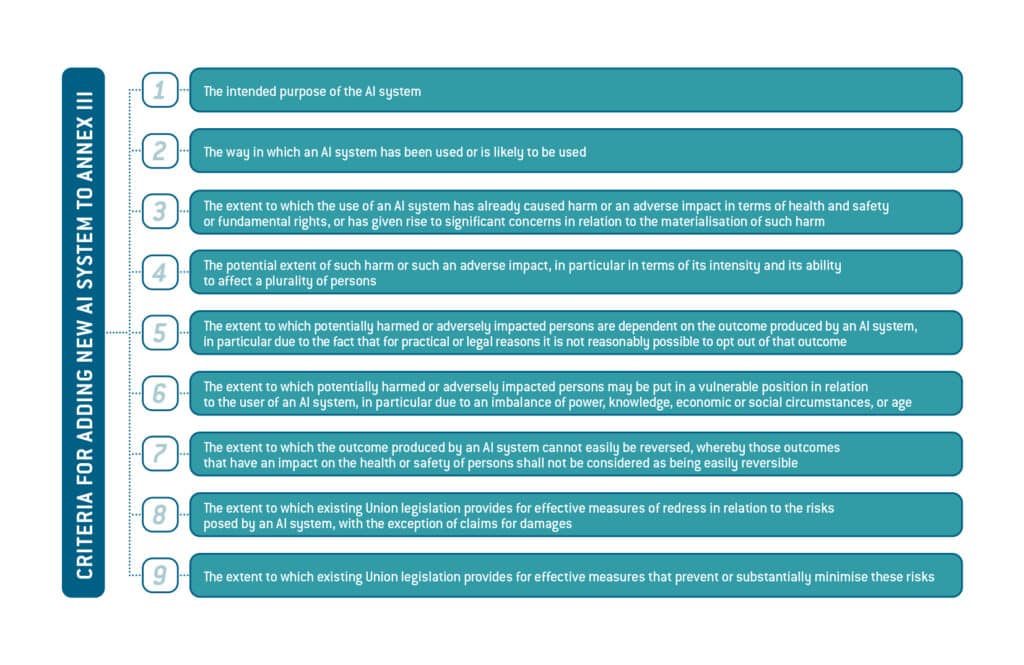

This list of critical areas included in Annex III of the AI Act is not set in stone; it may evolve. The Commission will be empowered to adopt delegated acts in accordance with Article 7 of the AI Act in order to amend this list by adding high-risk AI systems, provided that certain conditions are fulfilled. In assessing whether an AI system poses a health and safety risk, or a risk to people’s fundamental rights, that is equivalent to or greater than the risk posed by the high-risk AI systems already referred to in Annex III, the Commission should take nine criteria into consideration.[11]

It is worth noting here that the Commission’s proposal for the AI Act does not contain any rules for removing high-risk AI systems from Annex III, once the aforementioned conditions are no longer being fulfilled. This issue is addressed in the Council’s Approach and the addition of a third paragraph to Article 7 of the AI Act has been proposed. This paragraph provides the Commission with the option of removing items from Annex III if two conditions are fulfilled.[12] Firstly, the high-risk AI system concerned should no longer pose any significant risks to fundamental rights, health or safety, taking into account the criteria listed in paragraph 2 of Article 7 of the AI Act, and secondly, the removal should not decrease the overall level of protection of health, safety and fundamental rights under Union law. On February 1st, 2023, the establishment of a “regulatory dialogue with the competent authority in case the AI developers ask for their systems to be excluded from the high-risk category under Annex III” was also at the centre of the debate at the Parliament.

II. Conformity Assessment Procedure and Derogation (Articles 43 and 47 of the AI Act)

As noted above, a conformity assessment procedure takes place before the product can be put on the market. Its objective is to demonstrate that a product placed on the market complies with all legislative requirements and that it secures the confidence of consumers, public authorities and manufacturers. This section shows how the classification of high-risk AI systems under Article 6 of the AI Act determines the conformity assessment procedure that providers will have to follow.

1. Conformity Assessment Procedure under Article 43

A distinction will be made once again in terms of whether the classification of high-risk AI systems pertains to areas covered by Annex II or Annex III of the AI Act.

a. For High-Risk AI Systems Classified Under Annex II

Most legal acts listed in Annex II provide detailed and exhaustive provisions on the conformity assessment procedure imposed on the products that they cover. However, Article 43§3 of the AI Act requires that providers of AI systems – classified as high-risk under Article 6§1 – should comply not only with the conformity assessment required under these legal acts, but also with the requirements set out in Chapter 2, Title III of the AI Act and in points 4.3., 4.4., 4.5., 4.6§5 of Annex VII of the AI Act. This encompasses requirements that cover the following areas: Risk Management, Data and Data Governance, Technical Documentation, Record-Keeping, Transparency and Provision of Information to Users, Human Oversight, Accuracy, Robustness and Cybersecurity.

Even if a product, or its safety component, is considered high risk under the relevant legal act listed in Annex II of the AI Act, it does not mean that this same system will automatically be considered a high-risk AI system under the AI Act (Recital 31). Regulations (EU) 2017/745 on medical devices and (EU) 2017/746[13] on in vitro diagnostic medical devices provide, for example, a third-party conformity assessment for medium-risk and high-risk products. An AI-enabled medical device that is considered a high-risk product under Regulation (EU) 2017/745 is therefore not automatically a high-risk AI system within the meaning of the AI Act. In order to be considered as such, the requirements set out in Article 43§3 of the AI Act have to be followed.

The same applies for opting out of a third-party conformity assessment.[14] Where a legal act – listed in section A, Annex II of the AI Act – allows a manufacturer to opt out of a third-party conformity assessment, the manufacturer may also make use of this option under the AI Act provided two conditions are fulfilled:

- the manufacturer has applied all of the harmonised standards that cover all the relevant requirements specified in the legal act under the scope of which its product falls;

- the manufacturer has applied harmonised standards or, where applicable, common specifications referred to in Article 41, covering the requirements set out in Chapter 2, Title III of the AI Act.
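The opt-out described above is available only when the sectoral act allows it and both listed conditions are met. A minimal sketch of that cumulative reading follows; the function and parameter names are hypothetical and are used purely for illustration.

```python
def may_opt_out_of_third_party_assessment(
    sectoral_act_allows_opt_out: bool,
    applied_all_sectoral_harmonised_standards: bool,
    applied_ai_act_standards_or_common_specs: bool,
) -> bool:
    """Sketch of the opt-out conditions summarised above (section A, Annex II acts).

    - sectoral_act_allows_opt_out: the Annex II legal act itself permits an opt-out.
    - applied_all_sectoral_harmonised_standards: first condition in the list above.
    - applied_ai_act_standards_or_common_specs: second condition (harmonised
      standards or, where applicable, Article 41 common specifications covering
      Chapter 2, Title III of the AI Act).
    """
    return (
        sectoral_act_allows_opt_out
        and applied_all_sectoral_harmonised_standards
        and applied_ai_act_standards_or_common_specs
    )
```

A manufacturer that has applied every sectoral harmonised standard but none covering the AI Act requirements would, on this reading, still need the third-party assessment.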

b. For High-Risk AI Systems Classified Under Annex III

The AI Act also requires that conformity assessments be carried out for high-risk AI systems that are intended to be used in the areas listed in Annex III. However, a distinction is made between the providers that have to follow the conformity assessment procedure based on internal control, as required under Annex VI of the AI Act, and those that have to follow the conformity assessment procedure based on a quality management system and an assessment of their technical documentation, as required under Annex VII of the AI Act.

Two categories can be distinguished according to the areas listed in Annex III: the providers of AI systems that are intended to be used in biometric identification and categorisation of natural persons and those that are intended to be used in the other areas.

Regarding remote biometric identification systems, it should be noted that the AI Act provides for a choice of two procedures defined in Annex VI and Annex VII of the AI Act. If a provider, in demonstrating that a high-risk AI system complies with the requirements set out in Chapter 2, Title III of the AI Act, has not applied or has only partially applied the harmonised standards[15], or if such harmonised standards do not exist and common specifications[16] are not available, the provider will be required to follow the conformity assessment procedure set out in Annex VII of the AI Act. Otherwise, Annex VI should apply.

According to the Parliament approach, amendments were proposed that have a direct impact on the providers of AI systems that involve biometric identification and categorisation of natural persons. Indeed, according to these amendments, a provider of AI systems that are intended to be used for biometric identification and categorisation of natural persons is also required to follow the conformity assessment procedure set out in Annex VII: “where one or more of the harmonised standards referred to in Article 40 has been published with a restriction; when the provider considers that the nature, design, construction or purpose of the AI system necessitate third party verification, regardless of its risk level”. The long-awaited start of the interinstitutional negotiations will however be an opportunity to see if these proposed amendments will be retained or not.

For the rest of the areas provided for in points 2-8 of Annex III, the provider will have to follow the conformity assessment procedure set out in Annex VI in all cases.
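The choice of procedure for Annex III systems, as summarised above for the Commission’s draft, can be sketched as a small decision function. This mirrors the reading given in this paper rather than the full wording of Article 43, and it leaves out the Parliament’s proposed amendments; the enum, function and parameter names are hypothetical.

```python
from enum import Enum


class Procedure(Enum):
    INTERNAL_CONTROL = "Annex VI"           # conformity assessment based on internal control
    QMS_AND_TECHNICAL_DOCS = "Annex VII"    # quality management system + technical documentation


def annex_iii_procedure(
    annex_iii_point: int,
    *,
    standards_exist: bool = False,
    standards_fully_applied: bool = False,
    common_specs_available: bool = False,
) -> Procedure:
    """Select the procedure for a high-risk AI system listed in Annex III."""
    if not 1 <= annex_iii_point <= 8:
        raise ValueError("Annex III points are numbered 1 to 8 in the Commission draft")

    # Points 2-8: internal control in all cases.
    if annex_iii_point >= 2:
        return Procedure.INTERNAL_CONTROL

    # Point 1 (biometric identification and categorisation of natural persons).
    if standards_exist:
        # Standards not applied, or only partially applied, lead to Annex VII.
        needs_annex_vii = not standards_fully_applied
    else:
        # No harmonised standards and no common specifications also lead to Annex VII.
        needs_annex_vii = not common_specs_available

    if needs_annex_vii:
        return Procedure.QMS_AND_TECHNICAL_DOCS
    return Procedure.INTERNAL_CONTROL
```

For example, a biometric identification system for which harmonised standards exist but were only partially applied would be routed to Annex VII, whereas any system under points 2-8 is routed to Annex VI regardless of the other flags.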

2. Derogation

Article 47 of the AI Act provides that “any market surveillance authority may authorise [for a limited period] the placing on the market or putting into service of specific high-risk AI systems within the territory of the Member State concerned, for exceptional reasons of public security or the protection of life and health of persons, environmental protection and the protection of key industrial and infrastructural assets”. Under the Parliament Approach, an amendment has been proposed to Article 47 of the AI Act in order to include the judicial authority in this procedure. Removing the term “public security” from the exceptional reasons listed in Article 47 of the AI Act has also been proposed.

Such an authorisation should only be issued if the market surveillance authority concludes that the high-risk AI system complies with the requirements set out in Chapter 2, Title III of the AI Act. For high-risk AI systems that are intended to be used as safety components of devices, or which are themselves devices, covered by Regulation (EU) 2017/745 and Regulation (EU) 2017/746, Article 59 of Regulation (EU) 2017/745 and Article 54 of Regulation (EU) 2017/746 should also apply.

Once the authorisation has been issued, the market surveillance authority is required to notify the Commission and the other Member States. Article 47 does not provide any legal redress mechanism for providers wishing to place on the market or put into service specific high-risk AI systems within the territory of the Member State concerned. A redress mechanism is available only to the Commission and the Member States, so that they may object to the authorisation in the event that the system is found not to be compliant with the requirements set out in Chapter 2, Title III of the AI Act. In this case, the provider should be consulted, but is only afforded the option of presenting its views. Whenever the authorisation is considered unjustified, it should be withdrawn by the market surveillance authority of the Member State concerned. Under the Council Approach, it has been proposed to remove the paragraphs that provide the framework for this redress mechanism. Once again, the trialogues that involve the Commission, the Council and the Parliament should clarify this issue.

III. Conclusion

It should be understood from the aforementioned developments that the AI Act will certainly have a considerable impact on a wide range of products on the EU market, especially when one considers the number of areas and products that fall under the scope of the legal acts listed in Annex II, as well as the “dual” requirement of following both the conformity assessment procedures defined in the relevant legal act listed in Annex II, and in the AI Act.

As far as high-risk AI systems are concerned, the breadth of the scope of the AI Act, but also the exhaustiveness of the conformity assessment requirements and procedures, entail risks for consumer protection, especially when one considers the burdens on SMEs and start-ups wishing to use AI technology in applications that are considered – or may in future be considered – high risk under the proposed AI Act.

The German Federal Council (Bundesrat) expressed, in its Resolution 488/21 of September 17th, 2021, the concern that “the capacities and competences of conformity assessment bodies can also be a bottleneck for the authorisation of certain high-risk applications”. The significant negative impact on companies and on patient care, which resulted from the lack of designated conformity assessment bodies in the case of the implementation of Regulation (EU) 2017/745 (Medical Devices Regulation) and Regulation (EU) 2017/746 (In Vitro Diagnostic Medical Devices Regulation), is cited as an example.

Consideration should be given to the fact that this requirement relies on Member States, and that significant gaps in terms of fees for conformity assessments may appear across the EU, increasing the risk of non-compliance with the conformity assessment requirements. This is an issue that has also been considered in the Parliament, since it has been proposed that the following passage should be added to Article 43 of the AI Act: “the specific interests and needs of small suppliers must be taken into account when setting the fees for third-party conformity assessment, reducing those fees proportionately to their size and market share”.

This amendment is difficult to understand since Article 55§2 of the AI Act contains almost the same statement: “the specific interests and needs of the SME providers, including start-ups, shall be taken into account by the Member States when setting the fees for conformity assessment under Article 43, reducing those fees proportionately to their size, market size and other relevant indicators”. The additional intention of the Parliament Approach may be to reduce fees for third-party conformity assessments conducted under the legal acts listed in Annex II. However, this remains an important issue that should be clarified once the trialogues are initiated.

These statements are attributable only to the author, and their publication here does not necessarily reflect the view of the other members of the AI-Regulation Chair or any partner organizations.

This work has been partially supported by MIAI @ Grenoble Alpes (ANR-19-P3IA-0003).

[1] Article 294 of the Treaty on the Functioning of the European Union (TFEU)

[2] Irrespective of whether an AI system is placed on the market or put into service independently

[3] See European Commission, “Staff Working Document Evaluation of the New Legislative Framework”, Brussels, 11.11.2022 SWD(2022) 364 final, available at https://ec.europa.eu/transparency/documents-register/api/files/SWD(2022)364?ersIds=090166e5f3ae58d6

[4] See the requirements set out in Chapter 2, Title III of the AI Act, as well as points 4.3., 4.4., 4.5. and the fifth paragraph of point 4.6 of Annex VII

[5] Recital 29 of the AI Act

[6] Concerning Directive 2006/42/EC (known as the Machinery directive), which is listed in section A of Annex II, there is no mention of a third-party conformity assessment. However, a new draft Regulation (COM/2021/202 final), which is mentioned in the AI Act under the name of ‘Machinery Regulation’ and which is intended to replace Directive 2006/42/EC, was proposed by the Commission in 2021. This ‘Machinery Regulation’ mentions third-party conformity assessments.

[7] Article 6, para. 3 of the Council’s General Approach

[8] Article 6, para. 3 of the Council’s General Approach

[9] Article 6, para. 3 of the Council’s General Approach and Article 74, para. 2 of the AI Act

[10] Informal tripartite meetings on legislative proposals between representatives of the Parliament, the Council and the Commission

[11] Article 7, para. 2 of the AI Act

[12] Article 7, para. 3 of the Council’s General Approach

[13] Concerning Regulations (EU) 2017/745 and (EU) 2017/746 exclusively, Article 47 of the AI Act states that conformity assessment procedure derogations provided by these regulations “shall apply also with regard to the derogation from the conformity assessment of the compliance with the requirements set out in Chapter 2 of this Title [of the AI Act]”

[14] Article 43, para. 3 of the AI Act

[15] As referred to in Article 40 of the AI Act

[16] As referred to in Article 41 of the AI Act