As the European Data Protection Board (EDPB) works intensively to draft the Article 64(2) GDPR Opinion—requested by the Irish Data Protection Commission (DPC)[1] and relating to artificial intelligence models—we are pleased to share with all interested stakeholders the video of the workshop we organized on this topic during the CPDP International Conference on 22 May 2024.

The panel was chaired by Prof. Theodore Christakis and featured distinguished experts: Jessica G Lee, OpenAI (US); William Malcolm, Google (UK); Yann Padova, Wilson Sonsini (BE); Félicien Vallet, CNIL (FR). Clara Clark Nevola from the Information Commissioner’s Office (ICO) also participated as a special guest.

The spectacular development of generative AI has triggered a global reflection on how best to regulate the risks of this technology. The trilogues on the EU AI Act were marked by disagreements between the Council and the European Parliament over the regulation of “foundation models”. However, in terms of data protection, privacy, and security issues, generative AI is already subject to the GDPR. Data Protection Authorities (DPAs), led by the Garante (Italy’s DPA), have expressed concerns about compliance with GDPR principles by entities developing and deploying ChatGPT and other Large Language Models (LLMs). In response to these concerns, OpenAI, Google, and other companies have revised their privacy policies and taken steps to address GDPR issues.

Furthermore, the EU AI Act came into force on 1 August 2024, and the provisions relating to General Purpose AI (GPAI) will start to apply 12 months later. Significant questions remain about the interaction between the GDPR and the AI Act when it comes to regulating GPAI.

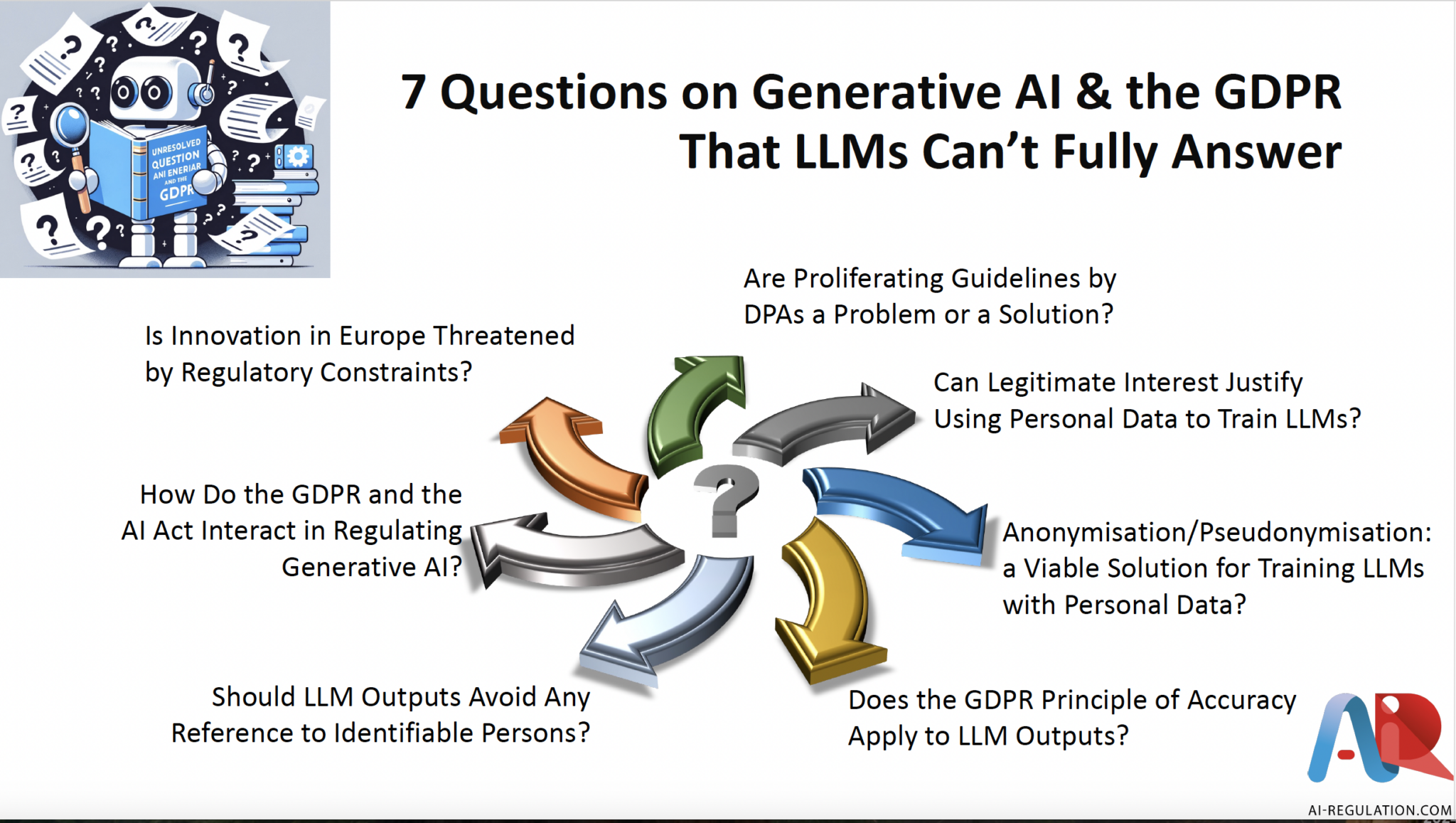

The workshop discussed the following issues:

- The effectiveness of the proliferation of DPA guidelines on the GDPR and GPAI;

- The use of the legitimate interest legal basis to justify web scraping for training LLMs with personal data;

- The possibility of anonymization/pseudonymization of such data as a risk mitigation measure;

- The applicability of the GDPR principle of accuracy to LLM outputs and how the GDPR deals with the issue of “AI Hallucinations”;

- The practicability of excluding any reference to identifiable individuals in LLM outputs;

- The interactions between the GDPR and the EU AI Act in regulating generative AI.

The video of the panel discussion is available on YouTube and can be found here.

[1] The DPC explains that: “This request is made in order to trigger discussion and facilitate agreement, at EDPB level, on some of the core issues that arise in the context of processing for the purpose of developing and training an AI model, thereby bringing some much needed clarity into this complex area. The opinion invites the EDPB to consider, amongst other things, the extent to which personal data is processed at various stages of the training and operation of an AI model, including both first party and third party data and the related question of what particular considerations arise, in relation to the assessment of the legal basis being relied upon by the data controller to ground that processing”.